Preliminary meeting

The information session (preliminary meeting) for the seminar will take place on 16.07.2025 at 2 pm.

- In-person location: 5th Floor Seminar Room (8123.05.017), GALILEO Building, Walther-von-Dyck-Str. 4, 85748 Garching

- Virtual attendance via Zoom: https://tum-conf.zoom-x.de/j/65786804584?pwd=UVGbyq887BCrz2BJyaY2zuIWjF8L0p.1, Meeting ID: 657 8680 4584, Passcode: 103139

Introduction

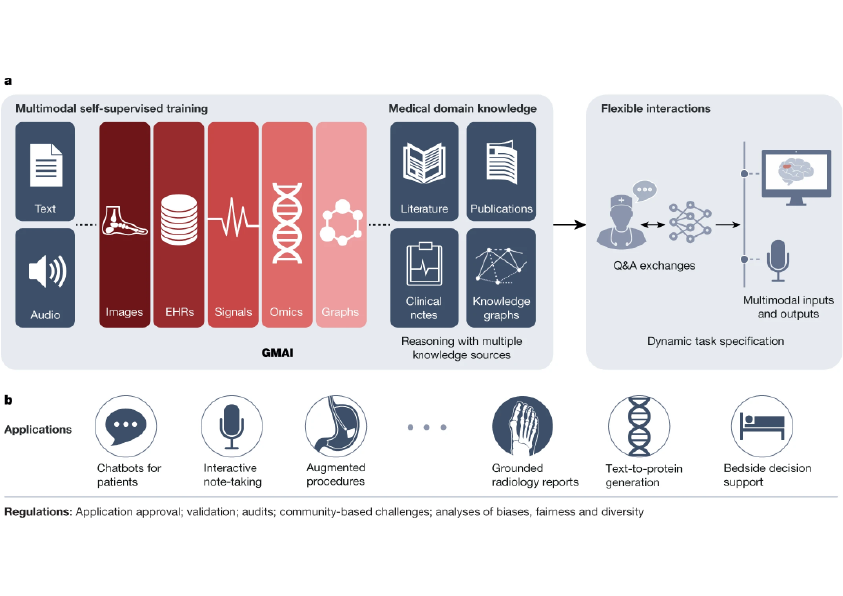

In this seminar, we will study and discuss research in the field of multi-modal AI in medicine and healthcare.

Medical data is inherently multi-modal. Clinical diagnosis and decision-making usually draw on a wide variety of data modalities, such as imaging data, clinical reports, lab test results, electronic health records, and genomics. With the arrival of uniform encoding via tokenization and deep learning architectures such as Transformers, multi-modal medical AI models are becoming increasingly powerful and are opening up exciting new possibilities. Using data from multiple modalities, these models can be trained to solve a specific medical problem or to tackle many problems at the same time.

In this seminar, we will explore several aspects of this research field, with topics such as:

- Techniques for fusion, translation, and alignment of multi-modal data

- Vision language models (VLMs) for medical and healthcare applications

- Multi-modal "generalist" AI models

- Foundation models for multi-modal medicine

For more information, such as prerequisites and format, please see the TUMOnline page.

Sign-up

Please sign up at https://matching.in.tum.de or write an e-mail to Huaqi (Harvey) Qiu at harvey.qiu@tum.de.